About master theorem

Background

In ECE590, a horrible professor talked so little about the master theorem. I was so confused about it. Two months later, one of my friends at USC reminded me of this and I certainly cannot understand by this point. In this blog, some points will be clarified which I do not want to repeat this over and over again in the future.

Recurrence equation

In the merge sort algorithm, the recurrence equation is given by:

\(T(n) = T(\lceil n / 2 \rceil) + T(\lfloor n / 2 \rfloor) + O(n)\)

This should be the correct version, however, we can safely strip out the floors and ceilings (using a technique called domain transformations), giving us a simpler recurrence:

\(T(n) = 2T(n/2) + O(n)\)

More formally, the recurrence of the form:

\(T(n) = aT(n/b) + f(n)\)

characterizes a divide-and-conquer algorithm that creates \(a\) subproblems, each of which is \(1/b\) the size of the original problem, and in which the divide and combine steps together take f(n) time. So:

- \(a\): number of subproblems

- \(b\): size of subproblems in regards to the original problem

- \(f(n)\): divide and conquer steps together take f(n) time

Define Master Theorem

Let \(a \ge 1\) and \(b > 1\) be constants, let \(f(n)\) be a function, and let \(T(n)\) be defined on the nonnegative integers by the recurrence:

\(T(n) = aT(n/b) + f(n)\)

where we interpret n/b to mean either \(\lfloor n/b \rfloor\) or \(\lceil n/b \rceil\). Then \(T(n)\) can be bounded asymptotically as follows:

- If \(f(n) = O(n^{\log_ba - \epsilon})\) for some constant \(\epsilon > 0\), then \(T(n) = O(n^{\log_ba - \epsilon})\).

- If \(f(n) = \Theta(n^{\log_ba})\), then \(T(n) = \Theta(n^{\log_ba})\logn\).

- If \(f(n) = \Omega(n^{\log_ba + \epsilon})\) for some constant \(\epsilon > 0\), and if \(af(n/b) \le cf(n)\) for some constant \(c < 1\), and all sufficiently large \(n\), then \(T(n) = \Omega(f(n))\)

Here, there are one thing that is universal across the three cases, the is in each of the three cases, function \(f(n)\) is compared with the function \(n^{\log_ba}\). Why \(n^{\log_ba}\)? Or if the form changes to (which is taught at ECE590):

if \(T(n) = aT(n/b) + O(n^d)\) (for constants \(a > 0, b > 1, d \ge 0\) independent of n) then: \[ T(n) = \left\{\begin{aligned}& O(n^d) & d > \log_ba \\ & O(n^d \log_{}n) & d = \log_ba \\ & O(n^{\log_ba}) & d < \log_ba\end{aligned}\right.\]

An answer on Reddit here told me an intuitive way of understanding this, here the \(\log_ba\) is also called critical exponent:

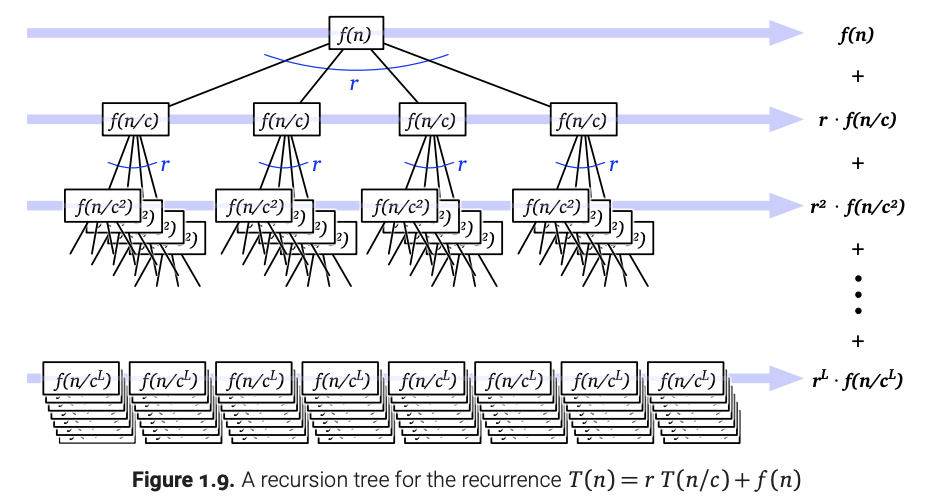

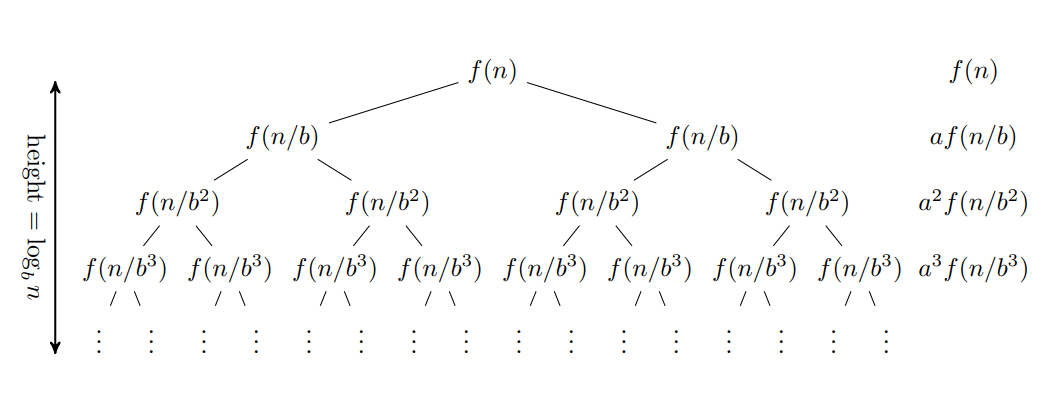

The intuition behind master theorem is that you imagine taking a specific input to the algorithm, then you visualize all the recursive calls it will make as one huge tree, and then you compute the total running time of the algorithm by taking each level of the recursion tree separately and summing up the work done while on that level. As you go down the recursion tree, the number of subproblems increases exponentially while at the same time the size of each subproblem and therefore also the work done in each subproblem decreases exponentially.

Then there will be two classifications:

- If the number of subproblems (\(a\)) grows faster, almost all work is done in the leaves, i.e., when solving the final calls that are handled in constant time.

- If the amount of work (\(b\)) decreases faster, you get the exact opposite: almost all work is done in the root of the tree (i.e., outside of all recursive calls) and the rest is negligible.

The critical exponent is exactly between those two: the subproblem count increases at the same rate at which the amount of work decreases. Then you get the same total amount of work on each level of the recursion tree, which means that your total time complexity is (the work done on any single level) times (the number of levels).

But why \(\log_ba\) and what is \(d\)? This is because we have not give a general solution to the general form of the recurrence function: \(T(n) = aT(n/b) + f(n)\). The solution for this is:

\(T(n) = \displaystyle \sum^{\log_bn}_{i=0} a^if(n.b^i) + O(n^{\log_ba})\)

This can be seen by drawing the tree generated by the recurrence. The tree has depth \(\log_bn\). There are \(a^i\) nodes at level \(i\), each labeled \(f(n/b^i)\). The value of \(T(n)\) is the sum of the labels of all the nodes of the tree.

The last term \(O(\log_ba)\) is the sum across the leaves, which is \(a^{\log_bn} \cdot f(1) = n^{\log_ba} \cdot f(1)\)

why \(a^{\log_bn} = n^{\log_ba}\)? \[ \begin{aligned} a^{\log_bn} &= (b^{\log_ba})^{\log_bn}\\ &= b^{\log_ba \cdot \log_bn}\\ &= b^{\log_bn \cdot \log_ba}\\ &= (b^{\log_bn})^{\log_ba}\\ &= n^{\log_ba} \end{aligned} \]

So now, if we intuitively think about this theorem again:

- if \(d > \log_ba\), then it means most of the job are done at the root level (considering the O(f(n)) is larger that leaves, which is \(O(n^{\log_ba}\))).

- if \(d = \log_ba\), then it means it is the same as the general solution and thus the time complexity is \(O(n^d\log_{}n) = O(n^{\log_ba} \log_{}n) = O(a^{\log_bn} \log_{}n)\) which means the height times the bottom leaves.

- if \(d < \log_ba\), then most of the job are done at the leaves.

References

About master theorem

https://jerry20000730.github.io/wiki/Algorithm/master_theorem/